When it comes to AI adoption, schools are either innovating or imitating the school down the road. Education has its own word for this - ‘isomorphism’.

I see this everywhere I go, and it’s not unique to AI adoption. The practice of ‘borrowing ideas’ in education is a time-honoured tradition. From behaviour cards to curriculum maps, schools lift ideas from one setting to give them new life in the next. However, there is a problem with this - an idea borrowed often loses its relevance when enacted in a new setting, as the people who created that solution did so to address an issue specific to their school and setting. The same applies to the practices and pedagogy surrounding AI.

What is isomorphism?

Isomorphism explains why spatially separate schools might exhibit, in different ways, very similar practices (Puttick, 2017). Since being on the road delivering AI training to schools and MATs, I have noticed an almost tribal use of AI language, tools and wrapper apps. This extends to the very language educators use and the narratives that shape how AI is perceived.

The thinking around isomorphism can be traced back to a famous paper by DiMaggio and Powell from 1983. They noted that when new fields emerge, they are often characterised by diversity. When the field becomes more established, there is an inevitable push towards homogenisation. In education, homogenisation happens when schools adopt the same policies, teaching methods, or technologies. When imitation takes hold, curriculum content or assessment approaches become standardised across different contexts, so local differences in practice fade because everyone follows the same models or guidance. Through a mix of policy mandates, the urge to copy perceived leaders, and shared professional training, AI adoption in schools follows the same path.

Homogenising forces

Educational research happens through ‘organisational fields’ (Puttick, 2017). In the AI context, this includes everyone who contributes to the field - EdTech suppliers, AI creators, policy-makers, AI consultants and influencers. Each plays a role in forming the ‘field’ that surrounds AI, but the process of formation is more nuanced when schools become involved. To examine this more closely, we need to make sense of the homogenising forces that cause schools to copy each other.

Time - Schools are time poor. With limited time and capacity, schools frequently adopt ready-made ideas from peers rather than design approaches from scratch. They do this to save time on design and testing, reduce uncertainty about outcomes, demonstrate alignment with credible ‘best practice,’ and meet immediate demands without overloading staff.

Lack of AI expertise - A lack of AI expertise in schools drives isomorphism as leaders and teachers, lacking confidence to make independent decisions, tend to rely on external templates, copy perceived leaders, and follow professional norms that promote similar frameworks and tools. This practice sees us copy the ‘visible winners’ to adopt AI at pace.

Weak leadership - Copying other schools verbatim, without adapting to the local context or critically evaluating fit, can be seen as a sign of weak leadership. It avoids the strategic thinking needed to analyse a school’s unique needs, strengths, and constraints, and it signals a lack of vision by following whatever is already being done elsewhere rather than setting a clear direction. This approach also risks a poor fit, as what works in one school may fail in another due to differences in culture, resources, or student demographics.

The ‘forces’ above show how easy it is to get AI adoption wrong. Imitation alone does not foster creative solutions or competitive advantage, and it can erode trust, with staff perceiving the leadership as reactive and unoriginal. The best way forward is to drive AI from within the school - not to copy what someone else has done.

How schools behave in the AI era

Hawley’s original (1968) definition of isomorphism calls it a ‘constraining process that forces one unit in a population to resemble other units that face the same conditions’. DiMaggio and Powell’s (1983) three mechanisms of isomorphism show us how schools are likely to act and react in the AI era.

Coercive isomorphism

This form of isomorphism explains how pressures from laws, regulations and policy influence educational practices in schools. This forces schools to comply with a set of rules or legislation, hence they are ‘coerced’ into action. We are just seeing the beginnings of this with government departments (DfE, UK), exam regulators (JCQ) and inspection bodies (Ofsted) publishing AI documents and policy. The Curriculum and Assessment Review (CAR) in the UK will create a common framework for schools to adhere to as the operational field around AI tightens.

The impact in schools is coercive isomorphism: many will adopt similar AI-use policies, or steal/borrow them from other schools. Eventually, this will extend to procurement procedures and safeguarding protocols, adopted because they are legally or politically required, not necessarily because schools believe they are the best pedagogical approach. The result is that, despite differing contexts, schools often end up with remarkably similar AI policy frameworks, acceptable-use agreements, and risk assessment templates.

Mimetic isomorphism

This form of isomorphism occurs when schools ‘mimic’ others in response to uncertainty. We often see this as the practice of copying the ‘visible winners’. These are the high-profile people and cases that promote a certain way of doing AI within your context under the banner of ‘best practice’.

The effect of mimetic isomorphism is two-fold. Firstly, schools model their AI strategy on perceived leaders, for example, copying another school’s AI literacy curriculum, adopting the same chatbot tool another MAT has rolled out, or structuring CPD exactly like a well-publicised AI pilot school. Secondly, there is the issue of ‘context’ to consider, as what works for one school won’t necessarily be relevant to the next. The result is a cluster of schools that ends up with near-identical AI policies, tools, and lesson plans, even if their student demographics differ, because ‘it worked there, so we’ll do the same.’ If we think about this pragmatically, the people who designed the solution are not present in the new situation, so the expertise, enthusiasm and drive required to enact the work are lacking. Leaders in the new setting often overlook the fact that people lose trust when they lack a sense of ownership.

Normative isomorphism

Once organisational fields settle, normative isomorphism takes hold. This is a convergence driven by professional norms, training, and networks, often from professional bodies. The drivers for this include teacher training providers, CPD networks, subject associations, and EdTech professional standards. At this stage, isomorphism becomes the ‘norm’ and is thus accepted. Here, shared training on AI ethics, lesson design with AI, and assessment moderation using AI tools builds a common mindset. Professional associations may publish AI teaching standards or frameworks, which teachers internalise.

Normative practices follow as teachers across different schools speak the same ‘AI pedagogy’ language and use similar lesson templates, because they’ve all attended the same conferences, read the same research papers, or followed the same national CPD programme.

How to stay original and relevant in the face of homogenising forces

While coercive, mimetic, and normative forces are analytically distinct, in reality, they often intermingle. The speed and unpredictability of AI development mean mimetic isomorphism is especially strong in the short term (copying visible ‘winners’), but over time, coercive and normative forces lock those practices into policy and culture.

To stay original, here are some tips based on what I see schools doing well:

Start with your own context – Ground any AI adoption in your school’s specific needs, culture, and resources. Check your SIP (School Improvement Plan). AI has to work for your school, not the one down the road.

Be careful who you follow - AI is a noisy field full of false actors and AI gurus. Follow wisely and seek out the reliable voices in the field. If you need a little help, check out the AI Sprints: STEM Learning

Adapt, don’t adopt – If you take an idea from elsewhere, rework it so it fits your students, staff capacity, and strategic goals. Involving people is the best way; ask students what they think. They are the most honest people I have ever met and will set you straight!

Build internal expertise – Invest in staff CPD so decisions are informed from within, not driven solely by external templates. I run AI Accelerator Hubs for schools and MATs to offer strategic guidance: AI Accelerator Hubs

Pilot before policy – Trial new tools or approaches in a small setting before embedding them school-wide. In-school trials with a bespoke teaching group, or within a subject area, create impact and a case study to scale up. I love this approach as it rarely fails to have a real, tangible impact in the classroom.

Invite diverse voices – Involve teachers, students, parents, and governors in shaping AI policies and practices. Inviting people in, either physically or remotely, keeps your perspective fresh.

Review and refresh regularly – Keep practices under review to avoid locking in outdated or ineffective strategies.

Benchmark broadly – Look beyond your immediate peers to international examples, research, and cross-sector insights.

References

DiMaggio, P.J. and Powell, W.W., 1983. The iron cage revisited: Institutional isomorphism and collective rationality in organizational fields. American Sociological Review, 48(2), pp.147-160.

Hawley, A.H., 1968. Human ecology. In: D.L. Sills, ed. International Encyclopedia of the Social Sciences. New York: Macmillan, pp.328-337.

Puttick, S., 2017. Isomorphism in education: Why do schools and colleges look so similar? Educational Futures, 8(2), pp.47-60.

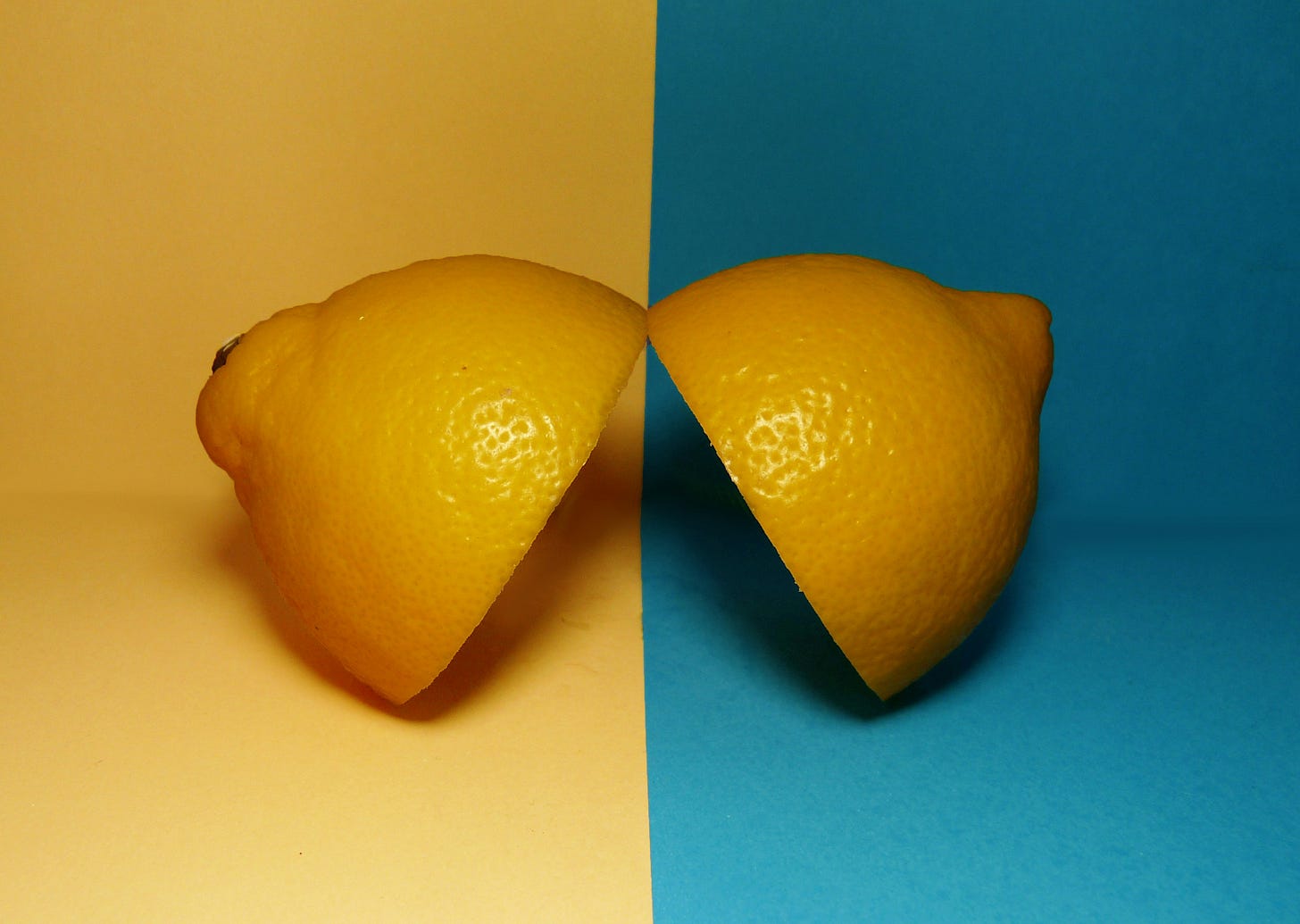

Image of two symmetrical lemons by Lewis Fagg on Unsplash

AI Accelerator Hubs run by Alex More: Hubs

AI Sprints Season 1 - STEM Learning: Catch up on Demand AI CPD